In my software development experience, there are a few different words for the generic notion of a “problem”.

A bug or a defect, for example, is defined by Wikipedia as follows:

A software bug is the common term used to describe an error, flaw, mistake, failure, or fault in a computer program or system that produces an incorrect or unexpected result, or causes it to behave in unintended ways. Most bugs arise from mistakes and errors made by people in either a program’s source code or its design, and a few are caused by compilers producing incorrect code.

An issue goes a level higher – a bug is an issue, but an issue might not be a bug. Wikipedia says:

In computing, the term issue is a unit of work to accomplish an improvement in a system. An issue could be a bug, a requested feature, task, missing documentation, and so forth. The word “issue” is popularly misused in lieu of “problem.” This usage is probably related.

Where am I going with all of this?

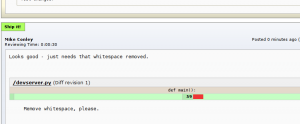

Well, remember when I said I was going to add defect reporting/tracking capabilities to Review Board? I asked for some feedback on my UI mockups on the developer mailing list, and an interesting conversation on terminology erupted.

Anyhow, the long and the short of it is – we’re going to call problems that still exist within a review request revision “issues”. These are distinct from the sort of thing that might show up in an issue tracker.

Maybe down the line, we’ll have a way for administrators to set their own word for it. From the thread, it sounds like everybody and their brother has their own favourite terminology. “Issue” will have to do for now.

Thanks again to everyone on the list who contributed to the conversation.